From Wrappers to Workflows: The Architecture of AI-First Apps

Paras D.

Jan 23, 2026

Building an AI demo is easy.

A controller calls an LLM API, blocks for a few seconds, and returns a response. It looks impressive in a pitch or an internal demo — until real users show up.

Building a production-grade AI application is an entirely different problem.

In the real world, AI APIs time out. They hallucinate. They fail intermittently. They get expensive fast. And users absolutely hate staring at loading spinners while your backend waits on a probabilistic system to finish thinking.

An AI-first application is not a traditional CRUD app with a chatbot bolted on. It requires a fundamental architectural shift: away from synchronous request–response flows and toward event-driven, stateful orchestration.

This is how we architect scalable AI backends at SilverSky.

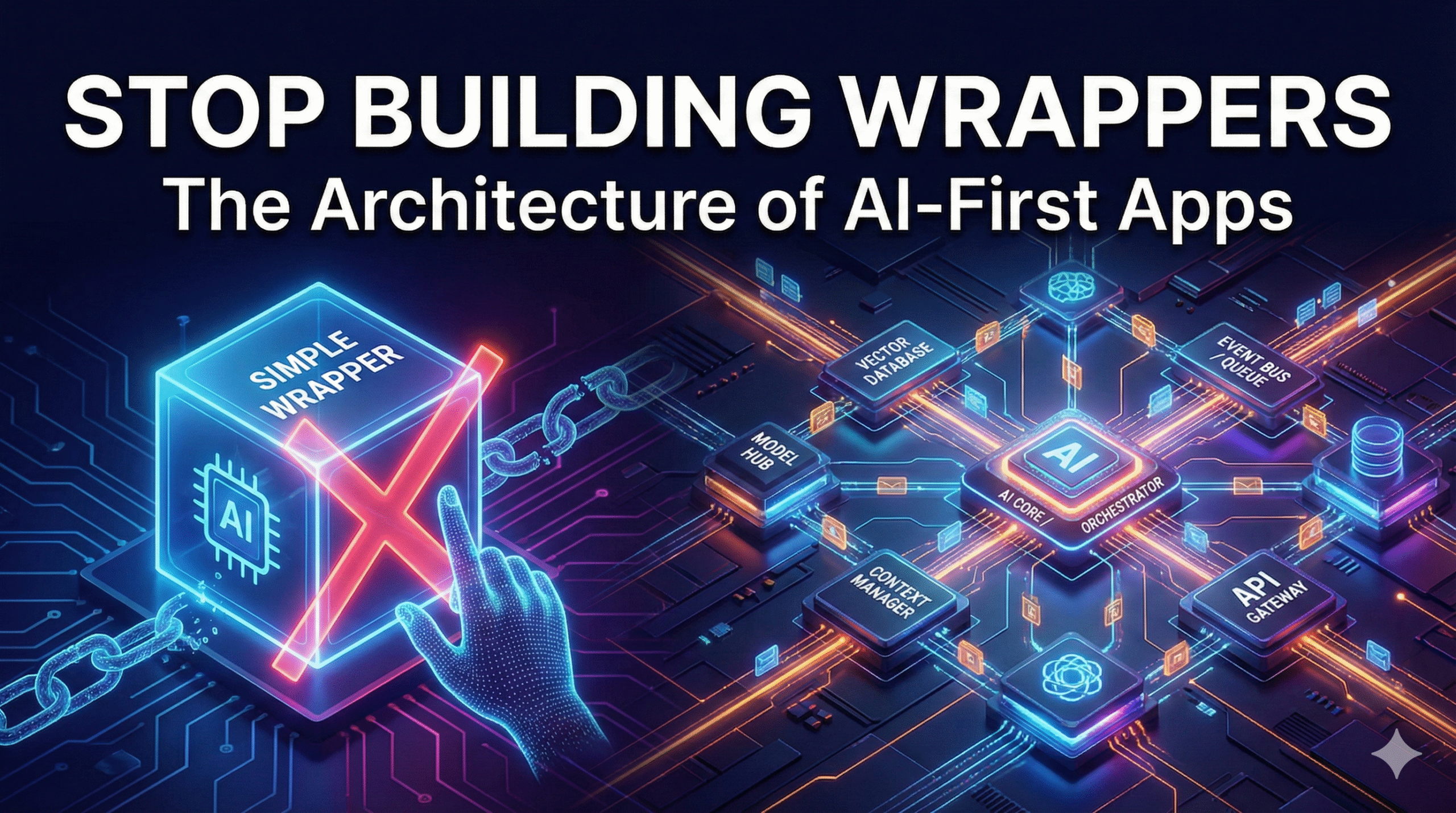

1. The Architectural Shift: From Wrappers to Orchestrators

In a standard web application, the backend is a relatively thin layer between the user and the database. Requests are short-lived, deterministic, and cheap.

In an AI application, the backend plays a very different role. It is an orchestrator.

The Wrapper Pattern (Don’t Do This)

This approach assumes:

- The model responds quickly

- The call never fails

- The output is always acceptable

- The cost is predictable

None of those assumptions hold in production.

The AI-First Architecture

Here, the backend explicitly manages:

- Long-running execution

- Retries and partial failures

- State and progress

- Cost and rate limits

- Asynchronous user feedback

Once you introduce probabilistic systems with unpredictable latency and cost, treating an LLM like a deterministic microservice is a category error. AI-first systems must be designed to expect chaos — and keep working anyway.

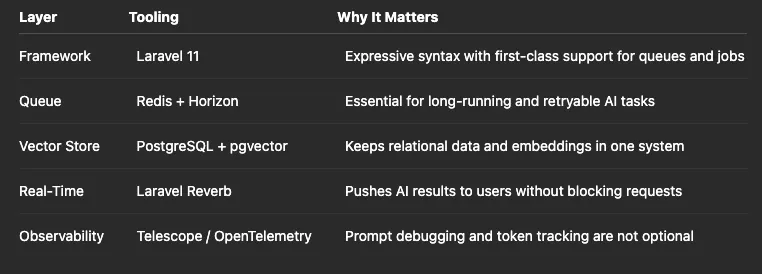

2. A Pragmatic Tech Stack for AI Backends

Laravel is surprisingly well-suited for AI workloads. Its opinionated abstractions around queues, jobs, caching, and events map cleanly to the constraints of production AI systems.

This stack favors operational simplicity over novelty — an underrated advantage when AI workloads already introduce enough complexity.

3. Never Call AI APIs from Controllers

Controllers should describe intent, not execution.

Calling an LLM directly from a controller:

- Blocks server threads

- Makes testing painful

- Couples your app to a specific provider

- Hides cost and retry logic in the worst possible place

The Anti-Pattern

The Service Layer Pattern

AI logic belongs in dedicated service classes. This makes it testable, observable, and swappable.

This pattern unlocks:

- Provider portability (OpenAI → Anthropic → local models)

- Deterministic testing

- Centralized cost controls

- Consistent retry and caching behavior

4. Latency Is the Default: Embrace Queues and WebSockets

AI is slow — by web standards.

Multi-step workflows like retrieval, summarization, and formatting regularly exceed 30–60 seconds in production. Any architecture that relies on synchronous HTTP requests will fail under that reality.

The solution is simple: move execution to the background and notify users asynchronously.

Step 1: Dispatch the Job

Step 2: Process and Broadcast

From the user’s perspective, the app feels responsive. From the backend’s perspective, nothing is blocked. This is the minimum bar for serious AI products.

5. Memory and Context: Long-Term AI Requires Vector Search

LLMs have short memories. Features like “Chat with PDF,” semantic search, or personalized assistants require retrieval-augmented generation (RAG).

We prefer PostgreSQL with pgvector over a separate vector database. For most teams, it dramatically simplifies the system: users, permissions, documents, and embeddings live together.

Dedicated vector stores have their place at massive scale, but most applications reach for them far too early. Operational simplicity is a feature.

6. Prompts Are Code

Hardcoding prompts in controller strings does not scale.

Prompts are business logic. They deserve versioning, testing, ownership, and structure — just like application code.

Treating prompts as first-class artifacts enables:

- A/B testing

- Safer iteration

- Clear ownership

- Faster debugging when outputs degrade

7. Cost Control Is Not Optional

The most common way AI startups fail isn’t bad models — it’s unbounded usage.

One infinite loop or poorly protected endpoint can generate a four-figure bill overnight. Guardrails must be built in from day one.

At a minimum:

- Enforce strict max_tokens

- Track per-user and per-tenant usage

- Implement hard limits and graceful failures

Add circuit breakers. If an AI provider starts returning errors, stop sending traffic temporarily. Protecting your system from itself is part of the job.

Final Thoughts

Building an AI-first backend isn’t about knowing how to call an API. It’s about handling everything that happens after the call.

Latency, retries, hallucinations, partial failures, user feedback, and cost controls are not edge cases. They are the core of the system.

Teams that treat AI as a feature ship demos.

Teams that treat AI as infrastructure ship products.

If you’re building an AI platform and running into timeouts, ballooning costs, or brittle workflows, we can help. At SilverSky, we specialize in production-grade AI backend architecture — systems designed to survive real users and real scale.

If you liked this post, you might also enjoy our deep dives on event-driven architectures and cost-aware system design for modern backends.